From its origins as a supplier of graphics chips to the PC gaming industry, Nvidia has evolved into the enabler of just about every computationally intensive field - artificial intelligence, data science, gaming, robotics, autonomous vehicles, the metaverse, edge computing, crypto mining - and the list goes on. It is probably no exaggeration to say that it is one of the most important companies in the world. Given the immense scope of this company, I will cover it over the course of four articles:

Part 1: Overview of GPU technology, overview of Nvidia, deep dive into its Gaming segment

Part 2: Data Center segment including Nvidia’s use cases in AI and high-performance computing

Part 3: Omniverse - Nvidia’s play on the metaverse, and the Automotive segment

Part 4: Valuation and forecasts, and summary

Disclaimer: I am long Nvidia, and this article is neither investment advice nor a substitute for your own due diligence. The objective of this article is to help formalise my thinking on the stock and hopefully provide some interesting insights into the business.

WHAT ARE GPUs?

There are two primary chips that perform computational functions for computer systems: the central processing unit (CPU) and the graphics processing unit (GPU). The CPU is the brain of a computer. It processes a wide range of complex tasks very quickly and largely sequentially, i.e. one instruction stream, or “thread”, at a time per core. It has a few heavyweight processing units (or cores) with high clock speeds[1], and its primary objective is latency optimisation: executing each task as fast as possible. A CPU can run several tasks concurrently by switching between threads, for example while one thread waits for data from memory, and many CPUs also support simultaneous multithreading (branded ‘Hyper-Threading’ by Intel), in which each physical core runs two threads at once by sharing its execution resources. However, there are limits to how many tasks a CPU can run in parallel, due to its limited number of cores and its limited cache memory.

A GPU[2] is a specialized processor with thousands of cores, each much less powerful than a CPU core, but together they can process many operations simultaneously. This parallel processing is particularly effective for computationally intense tasks that can be split into many independent pieces. GPUs are effectively extra muscle that assists the CPU with computationally intense work it would handle far more slowly on its own. The goal of GPU computing is to offload parallelizable workloads from the CPU, leaving the CPU to run the operating system and traditional PC apps.
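To make the CPU/GPU contrast concrete, here is a minimal Python sketch. It assumes an image is just a flat list of 0-255 brightness values and uses a hypothetical per-pixel operation, `brighten`. The sequential version mirrors a CPU working through the pixels one at a time; the parallel version splits the image into independent chunks and hands them to a pool of workers, which is the same shape of work a GPU spreads across thousands of cores. It only illustrates the structure: Python threads share a global interpreter lock, so this particular code will not actually run faster.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel: int, amount: int = 40) -> int:
    """Hypothetical per-pixel operation: depends only on its own input value."""
    return min(pixel + amount, 255)


def process_sequential(pixels: list[int]) -> list[int]:
    # CPU-style: work through the pixels one after another.
    return [brighten(p) for p in pixels]


def process_parallel(pixels: list[int], workers: int = 8) -> list[int]:
    # GPU-style in spirit: split the image into independent chunks and hand
    # each chunk to a worker. No chunk needs results from any other chunk.
    size = max(1, len(pixels) // workers)
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the original chunk order.
        results = pool.map(lambda chunk: [brighten(p) for p in chunk], chunks)
        return [p for part in results for p in part]


if __name__ == "__main__":
    image = [i % 256 for i in range(10_000)]
    assert process_parallel(image) == process_sequential(image)
    print("parallel and sequential results match")
```

Because each pixel's result depends only on that pixel, the chunks can be processed in any order, or all at once, and the output is identical.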

Parallel processing makes GPUs very effective at rendering 3D graphics. 3D models are stitched together from millions of triangles (polygons), whose positions are calculated using matrix arithmetic. When a character moves about in a video game, each of these triangles has to be recalculated for position, size, lighting and shading. This has to be done for every frame, and frames must be produced extremely quickly for the player to have a smooth experience: normally at least 60 frames per second. These calculations are computationally intense; however, a GPU’s many-core structure is very effective at performing these repeated operations at a fast pace. Because the calculations for each pixel can be done independently of other pixels, one GPU core can be assigned to a single pixel or a small cluster of pixels and operate independently of other cores.
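The per-triangle maths can be sketched in a few lines of Python. Graphics pipelines commonly represent each vertex in homogeneous coordinates (x, y, z, w) and move it with a 4x4 matrix; the sketch below is a simplified illustration, not how any particular game engine does it, and rotates one triangle’s three vertices a quarter turn. Each vertex is transformed without reference to any other, which is why this work spreads so naturally across GPU cores. It also computes the time budget per frame at 60 frames per second.

```python
import math

Vec4 = tuple[float, float, float, float]
Mat4 = list[list[float]]


def rotation_y(angle_rad: float) -> Mat4:
    """4x4 matrix rotating points about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [
        [c, 0.0, s, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [-s, 0.0, c, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform(m: Mat4, v: Vec4) -> Vec4:
    """Multiply one homogeneous vertex (x, y, z, w) by a 4x4 matrix.

    The result depends only on this vertex and the matrix, never on other
    vertices, so every vertex could be handled by a different GPU core.
    """
    return tuple(sum(m[row][k] * v[k] for k in range(4)) for row in range(4))


# One triangle = three vertices (w = 1 marks them as points, not directions).
triangle: list[Vec4] = [(1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0), (0.0, 0.0, 1.0, 1.0)]
turned = [transform(rotation_y(math.pi / 2), v) for v in triangle]

# Time available to redo all of this (plus lighting and shading) each frame.
FPS = 60
frame_budget_ms = 1000 / FPS  # about 16.7 ms

if __name__ == "__main__":
    for before, after in zip(triangle, turned):
        print(before, "->", tuple(round(x, 6) for x in after))
    print(f"frame budget at {FPS} fps: {frame_budget_ms:.1f} ms")
```

A real scene repeats this for millions of vertices, and then shades millions of pixels, inside that roughly 16.7 ms window.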

The move from graphics to general purpose computing

Around the early-to-mid 2000s, developers realized that the GPU’s parallel processing structure could be used not just for graphics, but for computations involving any large dataset. This applies in particular to mathematical operations such as matrix multiplication. As a result, GPUs are increasingly being used for complex modeling, research, data analytics, crypto mining, and of course artificial intelligence. These applications power the modern world and scientific research, and they now dwarf the original graphical use case of GPUs.
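Matrix multiplication shows why these workloads suit the GPU so well. Each entry of the result C = AB is the dot product of one row of A and one column of B, and no entry depends on any other. The minimal Python sketch below (plain lists, no libraries) deliberately computes the entries in a random order and still gets the same answer; on a GPU, each entry, or a tile of entries, could be assigned to its own thread.

```python
import random

Matrix = list[list[float]]


def entry(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """C[i][j] = dot product of row i of A with column j of B.

    It reads only A and B, never another entry of C.
    """
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul_any_order(a: Matrix, b: Matrix, seed: int = 0) -> Matrix:
    """Fill C = AB, visiting the output cells in a shuffled order."""
    rows, cols = len(a), len(b[0])
    c = [[0.0] * cols for _ in range(rows)]
    cells = [(i, j) for i in range(rows) for j in range(cols)]
    random.Random(seed).shuffle(cells)  # any order (or all at once) gives the same C
    for i, j in cells:
        c[i][j] = entry(a, b, i, j)
    return c


A = [[1.0, 2.0], [3.0, 4.0]]
B = [[5.0, 6.0], [7.0, 8.0]]

if __name__ == "__main__":
    print(matmul_any_order(A, B))
```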

For several decades, the exponential increase in the number of transistors that could fit on a chip, as described by Moore’s Law (a doubling roughly every two years), meant that CPU performance kept improving at a fast rate. In recent years, however, semiconductors have been coming up against the laws of physics, and it is becoming increasingly difficult and costly to keep squeezing more transistors onto a chip. As a result, advances in traditional CPU-based approaches have slowed. In their place, GPU-based ‘accelerated computing’ has taken center stage, delivering performance improvements at a pace ahead of Moore’s Law. Essentially, Moore’s Law lives on through Nvidia.

There are two primary types of graphics chips: integrated and discrete (also known as "dedicated" graphics). Integrated graphics are built into a chipset on the motherboard or, more commonly, onto the CPU die itself, and share the system's memory. A discrete graphics card is completely separate from the CPU and has its own memory used exclusively for graphics processing, which improves the performance of the overall system. For instance, if your desktop computer has an Nvidia GeForce RTX 2060 graphics card with 6GB of video memory, that memory is entirely separate from your computer’s 16GB of system memory. Discrete graphics cards are typically more expensive than integrated graphics and serve more high-end use cases.

Nvidia primarily sells discrete graphics cards and embedded systems-on-a-chip (SoCs)[3]. Intel and AMD primarily sell integrated graphics, given that they are primarily CPU suppliers (although AMD’s discrete graphics card business is Nvidia’s next largest competitor; more on competition later). While Intel and AMD’s integrated graphics have been improving over time and are good enough to run some basic 3D games and Flash content, they do not come anywhere close to rivaling Nvidia’s dedicated GPUs in handling the majority of top game titles, HD video and streaming media.

OVERVIEW OF NVIDIA

Nvidia is a fabless chip designer specialising in GPUs, of which it is the world’s largest supplier. It does not manufacture wafers itself, instead relying on other companies that specialise in the various stages of chip production: the bulk of its wafers are manufactured by semiconductor manufacturing giant TSMC, but it also uses Samsung, as well as a range of other service providers for assembly, testing and packaging.

Nvidia was co-founded by current CEO Jen-Hsun “Jensen” Huang in 1993 and began life as a PC graphics supplier. Back then it was one of almost a hundred largely undifferentiated startups trying to get a slice of the emerging market for graphics chips. Through some fortunate ‘bet the company’ strategic decisions by Jensen and pure brute-force engineering, Nvidia managed to produce graphics chips with the best performance and the shortest product cycle in the industry. This allowed it to stay ahead of the competition and capture significant share. In 1999 it coined the term GPU with the launch of the GeForce 256. This card was a major achievement because, for the first time, it shifted the whole graphics pipeline, including transform and lighting calculations, from the CPU to the GPU. The market for GPUs really started to take off, and in subsequent years Nvidia innovated its way to being one of the two surviving graphics chip companies in the industry. The other was ATI, which was acquired by AMD in 2006 and formed the foundation of AMD’s discrete GPU business, Nvidia’s key competitor[4].

In 2006 Nvidia launched the first GPU for general-purpose computing, which in conjunction with a CPU could accelerate analytics, deep learning, high-performance computing, and scientific simulations. Until then, one of the issues that made GPUs hard to use outside of gaming was that, unlike CPUs, they were difficult for developers to program. To make GPUs programmable, Nvidia introduced Compute Unified Device Architecture (CUDA) that same year: a proprietary software programming layer for use exclusively with Nvidia's GPUs. CUDA provided the libraries, debuggers and APIs that made GPUs far easier to program. CUDA was backwards compatible with the hundreds of millions of Nvidia GPUs already out there, and it achieved wide adoption as Nvidia consistently invested in the ecosystem. CUDA has created a significant moat around Nvidia, particularly in the data center (to be covered more in Part 2).
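CUDA’s core idea is that the programmer writes a function (a ‘kernel’) describing the work for one thread, and the GPU launches thousands of copies of it, each working out which data element it owns from its block and thread indices. Real CUDA kernels are written in CUDA C/C++; the Python below only emulates that indexing scheme, sequentially, for the classic SAXPY operation (out = a*x + y). The `launch` helper and the function names are hypothetical stand-ins for CUDA’s launch syntax, not part of any CUDA API.

```python
def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: list[float], y: list[float], out: list[float]) -> None:
    """Work done by ONE thread: compute a single element of out = a*x + y."""
    # Same formula a CUDA kernel uses: blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < len(x):  # guard: the last block may have more threads than elements
        out[i] = a * x[i] + y[i]


def launch(kernel, n: int, block_dim: int, *args) -> None:
    """Hypothetical stand-in for a 1-D kernel launch.

    A GPU would run all these threads concurrently; here they run one by one,
    which gives the same result because no thread depends on another.
    """
    grid_dim = (n + block_dim - 1) // block_dim  # enough blocks to cover n elements
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n
launch(saxpy_kernel, n, 4, 2.0, x, y, out)  # 3 blocks of 4 threads; 2 threads idle

if __name__ == "__main__":
    print(out)
```

The design point is that the programmer never writes the loop over elements; the hardware supplies it by running one thread per element.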

It’s remarkable that a technology that began life for one purpose could later find use cases with a market many times larger than the first. Since the release of its first CUDA-bundled general-purpose GPU in 2006, Nvidia has grown revenue at a CAGR of 16% as it diversified into many other use cases and end markets beyond gaming. Nvidia now segments its business along four business lines: Gaming, Data Center, Professional Visualization, and Automotive.
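Compound annual growth rate (CAGR) is the constant yearly rate that would take revenue from its starting value to its ending value. As a rough sketch, assuming about 15 years between 2006 and FY22 (an assumption for illustration, not a figure from the company), a 16% CAGR compounds to roughly a nine-fold increase:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Constant yearly growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def growth_multiple(rate: float, years: float) -> float:
    """How many times larger a value ends up after compounding at `rate`."""
    return (1 + rate) ** years


if __name__ == "__main__":
    # Assumption for illustration: ~15 years between 2006 and FY22.
    print(f"{growth_multiple(0.16, 15):.1f}x")  # prints 9.3x
```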

Across its different segments, Nvidia leverages its foundational GPU technology in various configurations adapted to each end market. For instance, in its Gaming segment it sells GPUs through its dominant GeForce brand of graphics cards. In its Data Center segment, which serves cloud and enterprise customers running intense AI workloads and high-performance computing, it sells DGX: a data center system that is both a server and a supercomputer, made up of multiple GPUs and AMD CPUs. In its Automotive segment for self-driving cars, it sells smaller supercomputers called AGX, which combine two SoCs and two GPUs.

Nowadays, however, Nvidia thinks of itself not just as a hardware company but as a full-stack computing company, providing the hardware, the system software and programming platforms like CUDA that sit on top of it, software platforms such as AI Enterprise and Omniverse, and domain-specific applications for a whole host of industries and use cases. As it moves further ‘up the stack’, software is becoming a bigger focus, and the company now has more software engineers than hardware engineers. It has a growing ability to monetise software, both bundled with its hardware and sold separately. I think the quote below from CFO Colette Kress at a recent investor presentation perfectly summarises Nvidia’s evolution over time.

“We started as a gaming company selling chips, but a lot has changed since then. We've focused on platforms. We've focused on systems, not just for overall gaming, but for the data center, for workstations and now even focused on automotive.

But now we're entering into a new phase, a new phase that we are thinking about software and a business model for software to sell separately. Now software is incorporated in all of our systems and platforms today. What fuels so much of our data center focus on AI and on acceleration is the help that we have done in providing both a development platform and software, SDKs and others for that. But now we have an ability to monetize separately, a great business model for us and a great ability for us to expand our reach for many of the enterprises that we are working with in our data center business.”

Colette Kress (CFO), Morgan Stanley TMT Conference 2022

By integrating across the full stack from hardware to software, Nvidia claims to have achieved a compound effect of accelerating computing by one million times over the past decade.
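To put that claim in perspective, a short Python calculation converts it to an equivalent yearly rate and compares it with Moore’s Law in its commonly quoted form (transistor counts doubling roughly every two years, an approximation rather than a law of physics):

```python
def annual_factor(total_multiple: float, years: float) -> float:
    """Constant per-year speed-up that compounds to `total_multiple` over `years`."""
    return total_multiple ** (1 / years)


# Moore's Law in its commonly quoted form: a doubling roughly every two years.
moores_law_per_decade = 2 ** (10 / 2)                           # 32x over ten years
moores_law_per_year = annual_factor(moores_law_per_decade, 10)  # ~1.41x every year
nvidia_claim_per_year = annual_factor(1_000_000, 10)            # ~3.98x every year

if __name__ == "__main__":
    print(f"Moore's Law: {moores_law_per_decade:.0f}x per decade, "
          f"{moores_law_per_year:.2f}x per year")
    print(f"Nvidia's claim: 1,000,000x per decade, {nvidia_claim_per_year:.2f}x per year")
```

On these assumptions, a million-fold gain over ten years works out to roughly 4x every year, against about 32x per decade for Moore’s Law.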

A lot of the above elements will be covered throughout Parts 2 and 3 as they relate to Data Center, Auto and Professional Visualisation segments of Nvidia. First, we begin with a deep dive into the Gaming segment.

GAMING SEGMENT (46% OF REVENUE)

In past articles I have written extensively about how gaming is a structural growth sector, growing faster than every other entertainment medium (Take-Two, Garena). One of the key drivers of this growth is continuous technological advancement, which makes games look more realistic and immersive. As games become more graphics-intensive, there is a never-ending arms race among gamers to get the best performance and visual experience. Other trends underpinning the sector’s growth include the popularization of free-to-play business models, e-sports, streaming, and the metaverse (AR/VR experiences). Nvidia’s GeForce is the dominant brand in PC gaming GPUs and plays a central role in enabling all of this.

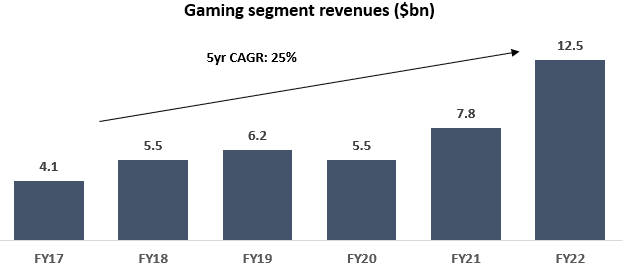

Gaming is currently Nvidia’s single largest segment, making up $12.5bn of revenue (46%) and growing at a historical 5-year CAGR of 25%, and it provides a solid base for the business. FY22 (which is predominantly CY21, given the January year-end) was a record year, driven by the pandemic-fuelled boom in gaming and the ramp of its latest GeForce RTX 30 series (more on this later).

The primary end market for this segment is desktop and laptop PCs: gamers either buy Nvidia GeForce graphics cards directly and build their own DIY gaming rigs, or buy gaming desktops and laptops with GeForce cards pre-installed. Desktop GPUs are estimated to be around 60% of this segment and laptops around 30%, although laptops are seeing faster unit growth than desktops (5-year CAGR of 34%) as high-end gaming laptops quickly gain popularity. The rest of the segment is made up of revenue from the Nintendo Switch (which is built on Nvidia’s Tegra SoC[5]), its SHIELD multimedia device, as well as its cloud gaming service GeForce NOW (more on this one later).

Given how important PCs are to this segment, an obvious concern is the decline of PC sales and the long-predicted death of PC gaming. While it is true that overall consumer PC sales have been declining over the last decade, there is an interesting bifurcation happening: the much smaller segment of high-end gaming PCs is actually growing at a healthy rate, and this is the market that Nvidia’s discrete GPUs mainly serve. Unlike other consumer technology segments where performance needs can become saturated, PC gamers are always pushing the technological boundary. It is practically inevitable that games five years from now will look better than games today, so hardware needs to go through constant upgrade cycles.

Similarly, the death of PC gaming never really came to pass. The number of PC gamers has grown steadily over time, fuelled in recent years by social games like Fortnite, which have entered mainstream culture, and by the rising popularity of e-sports. According to Statista there are currently 1.8bn PC gamers in the world.

All of these industry tailwinds bode well for Nvidia, whose GeForce cards have undisputed dominance in PC gaming GPUs, with market share consistently above 80% in recent years.

It is important to note that not only have Nvidia’s GPU unit sales been growing, but average selling price (ASP) has been on a continuous upward climb. Nvidia’s 5-year Gaming revenue CAGR of 25% is made up of unit growth of 11% and ASP growth of 13%. The key driver of this strong price increase is the new generations of GPU architectures that Nvidia launches every few years.
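Because gaming revenue is units sold multiplied by average selling price, the two growth rates compound rather than add. A quick Python check using the 11% and 13% figures above:

```python
def combined_growth(unit_growth: float, asp_growth: float) -> float:
    """Revenue = units x ASP, so yearly growth factors multiply rather than add."""
    return (1 + unit_growth) * (1 + asp_growth) - 1


gaming_growth = combined_growth(0.11, 0.13)  # ~0.254, i.e. about 25%
naive_sum = 0.11 + 0.13                      # 0.24, misses the interaction term
interaction = gaming_growth - naive_sum      # equals 0.11 * 0.13 = 0.0143

if __name__ == "__main__":
    print(f"combined: {gaming_growth:.1%}, naive sum: {naive_sum:.0%}")
```

The product comes to about 25.4%, consistent with the 25% revenue CAGR; simply adding the two rates would give 24% and miss the 1.4-point interaction term.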

GPU architectures

Every 2-3 years, Nvidia releases a new chip architecture (named after famous scientists) which improves performance and introduces new technological features. The below table shows the last few GPU architectures, their key specs and price points.

As can be seen, with every new cycle Nvidia has been able to take advantage of TSMC’s and Samsung’s progress in commercializing smaller process nodes[6], which allow more transistors to fit on a chip, and thus more cores on a GPU. GPU processing power scales roughly with the number of cores (along with clock speed): the more cores, the more computations can be done in parallel. Its latest Ampere architecture, which powers the GeForce RTX 30 series of cards, has more than double the cores and nearly 4x the transistors of the Pascal architecture from 5 years ago, and it delivers twice the performance and power efficiency of its predecessor, Turing. Further, this year Nvidia will likely announce its next-generation Lovelace architecture with the GeForce 40 series, based on TSMC’s 4nm or 5nm process node, which is expected to deliver 2x the performance of the Ampere GeForce 30 series.
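A common back-of-the-envelope way to compare GPUs is theoretical peak throughput: cores x clock speed x operations per core per clock cycle, where a fused multiply-add is conventionally counted as two floating-point operations. The Python sketch below uses a hypothetical GPU with 10,000 cores at 1.5 GHz rather than any real card’s specifications. Real-world performance also depends on memory bandwidth, caches and how well software keeps the cores busy, so the result is a ceiling, not a benchmark.

```python
def peak_fp32_tflops(cores: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    cores x clock (cycles per second) x operations per core per cycle.
    A fused multiply-add (a*b + c) is conventionally counted as 2 operations.
    """
    return cores * clock_ghz * 1e9 * flops_per_clock / 1e12


# Hypothetical GPU, not any real card's specifications.
base = peak_fp32_tflops(cores=10_000, clock_ghz=1.5)
doubled_cores = peak_fp32_tflops(cores=20_000, clock_ghz=1.5)

if __name__ == "__main__":
    print(f"{base:.1f} TFLOPS -> {doubled_cores:.1f} TFLOPS with twice the cores")
```

At a fixed clock, doubling the core count doubles this theoretical peak, which is why each process-node shrink that fits more cores onto a chip matters so much.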

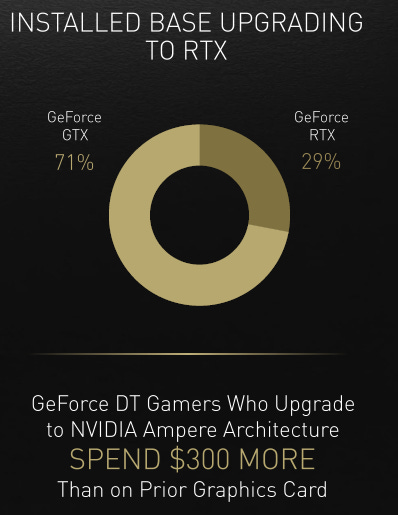

The other notable point is the increasing ASP with every generation. Performance-hungry consumers have generally accepted that they have to pay up for continuously advancing graphics technology. With the aforementioned slowdown in Moore’s Law, new generations of wafers with higher transistor density are getting costlier to manufacture, and TSMC has to pass some of that cost on to customers like Nvidia, which in turn have to charge more to protect their margins. However, Nvidia also has significant pricing power in its own right, thanks to the strength of the GeForce brand and its ability to deliver large leaps in performance and innovative new features with every generation of cards. According to the company, gamers who upgrade to Ampere cards spend on average $300 more than they did on their prior graphics cards. This is how Nvidia has been able to drive such strong top-line growth in gaming, in contrast to the rest of the PC hardware industry.

With every new cycle, Nvidia seems to break new records. Its current Ampere architecture is seeing 2x the adoption of its predecessor Turing even at its higher price point.

Two innovations that made the Turing and Ampere architectures particularly ground-breaking are worth discussing - ray-tracing and deep learning super sampling.

Ray-tracing (RTX) and Deep Learning Super Sampling (DLSS)

Ray-tracing, which is what the RTX designation in GeForce cards refers to, is the new gold standard in computer graphics. The technique itself has long been used for offline rendering in film, but Nvidia pioneered real-time ray-tracing on consumer GPUs, first featured in its Turing architecture in 2018, and it is now being adopted by the entire gaming industry.

Until recently, 3D images were created through a process called rasterization, which essentially involves projecting 3D models onto a 2D screen, working out which objects will be visible on the screen, ‘guessing’ where their shadows and reflections will fall, and then applying textures and shading to the pixels accordingly. Rasterization is now being supplemented, and in places replaced, by a more advanced and math-heavy technique called ray-tracing. Ray-tracing essentially involves casting rays from the camera, through the 2D image (your screen) and into the 3D scene to be rendered. As the rays propagate through the scene, they are reflected, pass through semi-transparent objects and are blocked by various objects, producing the corresponding reflections, refractions, and shadows. This gives rise to stunningly detailed, photo-realistic images that are rendered dynamically in real time.
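The core of ray-tracing can be shown with a toy Python renderer: a minimal sketch assuming a single sphere, a camera at the origin and one ray per pixel. Each ray is tested for intersection with the sphere by solving a quadratic equation, and hits are drawn as `#`. A real ray tracer would keep following each hit with further rays for reflections, refractions and shadows, which is where the heavy computation comes in. Note that each pixel’s ray is independent of every other, so the work parallelizes naturally across GPU cores.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Distance t along the ray to the nearest intersection, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4 * a * c
    if disc < 0:
        return None  # the ray passes the sphere by
    t = (-b - math.sqrt(disc)) / (2 * a)
    # Keep only hits in front of the camera (this toy assumes the camera is outside the sphere).
    return t if t > 0 else None


def render(width=21, height=11, center=(0.0, 0.0, -3.0), radius=1.0):
    """Cast one ray per pixel from a camera at the origin through a screen at z = -1."""
    rows = []
    for py in range(height):
        row = ""
        for px in range(width):
            # Map the pixel centre to screen coordinates in [-1, 1]; flip y so up is positive.
            u = 2 * (px + 0.5) / width - 1
            v = 1 - 2 * (py + 0.5) / height
            hit = hit_sphere((0.0, 0.0, 0.0), (u, v, -1.0), center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render()))
```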

In addition to RTX, Nvidia introduced Deep Learning Super Sampling (DLSS)[7] with its Turing cards: an AI-based image upscaling technology that boosts frame rates while still producing sharp images for games. It does this by ‘filling in’ missing pixels in every frame, effectively lifting the resolution of an image while only needing to render a fraction of the pixels. Think of it as rendering a game at 1440p and reconstructing it at 4K. To do this, DLSS takes advantage of the Tensor Cores that Nvidia added to its GPUs. Tensor Cores are specialised units designed specifically to accelerate machine learning algorithms, and they are one of the key ingredients in Nvidia’s success in data center-fuelled AI applications (more on this in Part 2).
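The arithmetic behind the 1440p-to-4K example is simple pixel counting. The Python sketch below uses the standard 16:9 dimensions for each resolution and shows that rendering at 1440p means shading only about 44% of the pixels in a 4K frame, leaving DLSS to reconstruct the rest. It does not model DLSS’s neural network itself.

```python
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),  # consumer "4K" UHD
}


def pixel_count(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height


def rendered_fraction(render_res: str, output_res: str) -> float:
    """Share of the output frame's pixels that are actually rendered before upscaling."""
    return pixel_count(render_res) / pixel_count(output_res)


if __name__ == "__main__":
    for res in ("1440p", "1080p"):
        print(f"{res} -> 4K: {rendered_fraction(res, '4K'):.0%} of pixels rendered")
```

Rendering at 1080p and upscaling to 4K would mean shading exactly a quarter of the output pixels.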

Together, RTX and DLSS created a giant leap forward in terms of graphics rendering. There are currently over 250 games that utilise RTX and DLSS technology, including the heavyweights in each genre such as Fortnite (battle royale), Call of Duty (first-person shooter), Cyberpunk 2077 and Red Dead Redemption 2 (open-world), and Minecraft (sandbox). Nvidia’s GPUs are the de facto hardware choice for powering all of the top game titles.

Further, penetration of the more expensive RTX cards is still quite low, at only around 30% of the GeForce installed base, so the company sees significant runway for this upgrade cycle. On top of that, its upcoming Lovelace architecture and GeForce 40 series will likely kickstart a further upgrade cycle over the next few years.

Cloud gaming: GeForce NOW

In 2020 Nvidia launched a cloud gaming service called GeForce NOW, part of the company’s broader efforts to generate more ‘up-the-stack’ subscription-style revenue. GeForce NOW provides high-end PC gaming via GeForce GPUs in the cloud for $10 a month, and there is also a free tier and a more premium tier. It’s similar in style to Google Stadia and Microsoft’s Xbox Cloud Gaming, although with GeForce NOW you stream games that you have purchased separately from existing PC game stores. The target market is gamers who lack the hardware needed to play the latest games. GeForce NOW boasts more games than any other service and has 10m members - as seen below, the majority are those with underpowered PCs or laptops.

The TAM for cloud gaming is potentially huge, with billions of people who own only low-end PCs or mobile devices and could become gamers. However, as we’ve seen with the limited success of Google Stadia, we need to temper our expectations around cloud gaming adoption. One key issue is that people who want to play the more hardcore AAA titles typically prefer to own their hardware and games for maximum performance, while casual gamers who play on mobile are probably not the right target market. The challenge for cloud gaming is that it appears to be an offering that mostly makes sense for casual gamers, yet is geared toward games that appeal to hardcore gamers. Having said that, GeForce NOW seems to be seeing decent traction with 10m members, although it’s unclear how many of these are paying members.

Broader investments in software and the gaming ecosystem

To further differentiate its hardware, Nvidia has done a really good job of investing in software and the broader gaming ecosystem. Software has been ingrained in the company’s DNA since its invention of the very first programmable pixel shader in 2001. Several years ago it set up an e-sports laboratory staffed by Nvidia research scientists dedicated to understanding player performance. This work led to the launch of Nvidia Reflex, a combined software and hardware solution that reduces latency in competitive games. In Blizzard's Overwatch, Reflex reduced system latency by 50%, and 8 of the top 10 competitive shooters have now integrated Reflex.

It also launched Nvidia Broadcast, an app that can turn any room into a broadcast studio with the help of AI-enhanced voice and video. While the benefits for game live-streamers are clear, there is also broader use for work video conferencing. I haven’t used the app personally, but Brad Slingerlend from NZS Capital wrote a good review of it in his recent newsletter.

These applications, along with a host of other solutions such as G-Sync, GeForce Experience, and Game Ready Drivers, have allowed Nvidia to add even more value to gamers and developers, further entrenching its position in the gaming industry.

Nvidia’s dominance over AMD looks to have staying power

As mentioned earlier, AMD’s acquisition of ATI gave it a decent presence in the discrete GPU market, which it mainly serves through its Radeon brand of GPUs. While Radeon is generally well regarded, Nvidia has maintained a consistent lead: its market share over the last 10-15 years has been in the range of 60-80%, rising above that in recent years. Nvidia holds this lead even though AMD’s cards are often comparable in performance and sometimes cheaper in certain categories. Nvidia has managed to build very strong brand loyalty to GeForce through continuous innovation (such as pioneering real-time ray-tracing) and investment in the overall gaming ecosystem. This edge is likely to continue, as Nvidia’s annual R&D budget of $5.3bn is almost double that of AMD, whose R&D efforts are split across both GPUs and CPUs.

There is a bit of hype around AMD’s upcoming RDNA 3 architecture, based on TSMC’s 5nm process node, with some saying it may outcompete Nvidia’s upcoming Lovelace-based GeForce 40 series (yet to be announced). Time will tell, and there is definitely a risk that a more resurgent and focused AMD may gain share; however, in past episodes of new product launches from AMD, any market share it won was quickly ceded back to Nvidia. For all of the above reasons, I’m confident that Nvidia will maintain its dominant position over the long term.

SUMMARY

A quick summary of the key takeaways from this article:

A GPU’s parallel processing architecture allows it to perform many calculations across streams of data simultaneously. This is highly effective not just for rendering graphics, but for many computationally intense workloads, including artificial intelligence, machine learning, and data science

Nvidia started off as a supplier of graphics to the PC gaming industry, but has since evolved into general-purpose GPU computing, with a number of fast-growing end markets, namely AI and high-performance computing. It is now becoming a full-stack computing company with a growing software business

Gaming is still currently the largest segment of Nvidia (46% of revenue), and is underpinned by strong secular trends. Unlike the overall consumer PC market, high-end gaming PCs are still growing by double digits and gaming laptops are growing even faster

Through continuous innovation (such as RTX and DLSS), and investments in software and the gaming ecosystem, Nvidia has consistently managed to differentiate its hardware. The GeForce brand has become the undisputed market leader with over 80% market share. Even though there is a risk that a more resurgent AMD may gain share, as it stands, it looks like Nvidia’s dominance isn’t going to disappear quickly

Nvidia’s current Ampere architecture, which powers the GeForce RTX 30 series, is seeing the fastest adoption in the company’s history, and with RTX cards making up only around 30% of the GeForce installed base, the growth runway is still large. Further, this year it will likely announce its new Lovelace architecture with the GeForce 40 series, expected to deliver 2x the performance of Ampere, which should kickstart a further upgrade cycle over the next few years.

In the next article I will dive into its Data Center business, which covers Nvidia’s business in enabling cloud AI, enterprise AI, high-performance computing, edge computing, and its growing software platform.

Thank you for reading and hopefully you found the article helpful. I welcome all feedback, good or bad, as it helps me improve and clarifies my thinking. Please leave a comment below or on Twitter (@punchycapital).


For further reading / info on Nvidia and the industry:

The global semiconductor value chain

Moore's Law Is Dead - YouTube channel for all things chips and graphics cards

Asianometry’s YouTube videos on Nvidia

Acquired podcast episode on Nvidia

Liberty RPF’s newsletter Liberty’s Highlights has great ongoing coverage of Nvidia

Stratechery interview with Jensen Huang

Shoot To Kill (Forbes article)

Nvidia company presentations and materials (which are excellent)

[1] CPUs typically have 4-8 cores, which are the execution units of the CPU. The clock speed of a core is the number of cycles it executes per second, measured in GHz (gigahertz). A “cycle” is one tick of the processor’s clock, the basic unit of time in which a CPU does work; a 3GHz core ticks three billion times per second.

[2] Graphics cards vs. GPU: while the terms are often used interchangeably, the two are not actually the same. The GPU is the main processor on a graphics card, which also carries video memory, power delivery and cooling.

[3] A system-on-a-chip integrates a CPU, GPU, memory and other components on a single integrated circuit. SoCs are used primarily in multimedia and mobile devices, a market in which Nvidia hasn’t traditionally been strong, although SoCs are seeing increasing use in autonomous vehicles.

[4] The early history of Nvidia is truly fascinating, and for those who wish to dive into the details I highly recommend Acquired podcast’s Nvidia episode, as well as Asianometry’s YouTube videos (see list of resources above).

[5] The Nintendo Switch is powered by Nvidia’s Tegra SoC, which integrates an ARM CPU with an Nvidia GPU in a single package. Other than Microsoft’s first Xbox, Sony’s PlayStation 3 (whose RSX graphics chip Nvidia co-developed) and the Switch, Nvidia has rarely featured in consoles; AMD powers both the current PlayStation and Xbox consoles.

[6] The nomenclature 7nm/5nm etc. in semiconductors refers to successive generations of manufacturing processes that shrink transistors, allowing companies to pack more of them into a given area of silicon. The names are now largely generational labels rather than a measurement of any physical feature. Increased transistor density generally means more processing power and speed.

[7] Before Deep Learning Super Sampling (DLSS), traditional super sampling could be done by having the game render at a higher resolution than your monitor displays and then scaling the image down, a technique known as supersampling anti-aliasing (SSAA). So while your monitor might be at 1080p, the game could be rendering in 4K. This smooths out jagged edges and creates crisp images, but doing so requires heavy-duty graphics cards. By using AI, DLSS lets you achieve a similar effect of a higher resolution without needing the beefy hardware.
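Supersampling’s cost is easy to see in code. The minimal Python sketch below assumes a grayscale image stored as a list of rows, rendered at twice the display resolution in each direction (so four times the pixels), and averages each 2x2 block into one displayed pixel. The hard black/white edge in the example turns into intermediate grey values, which is the smoothing effect described above.

```python
Image = list[list[float]]


def downsample_2x(image: Image) -> Image:
    """Average each 2x2 block of a supersampled grayscale image into one pixel.

    Assumes the image was rendered at twice the display resolution in each
    direction (four samples per displayed pixel) and has even dimensions.
    """
    height, width = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]


# A hard diagonal edge between black (0) and white (255), rendered at 4x4...
supersampled = [
    [0, 0, 0, 255],
    [0, 0, 255, 255],
    [0, 255, 255, 255],
    [255, 255, 255, 255],
]
# ...becomes a 2x2 displayed image whose edge pixels are intermediate grey.
displayed = downsample_2x(supersampled)

if __name__ == "__main__":
    print(displayed)
```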

